AI Expert Says The Technology Will Destroy Humanity

Artificial intelligence (AI) is an exciting new technology that has people everywhere wondering how it will affect the world we live in. Some think it will improve the safety and well-being of humans, while others think it will eventually cause an apocalypse. Eliezer Yudkowsky, the well-known and controversial AI theorist, recently said he believes the technology will destroy humanity, according to Futurism.

Yudkowsky has been warning the world for decades that we need to be careful with AI, but now he is going further: "I think we're not ready, I think we don't know what we're doing, and I think we're all going to die." Earlier this year, in an op-ed for Time, he suggested that we completely halt AI development and even be prepared to destroy a rogue data center.

Those who laughed at his concerns years ago might soon be coming to him for advice on how to stop the AI apocalypse.

Yudkowsky's biggest concern is that we don't fully understand the technology we have created. Take OpenAI's GPT-4, the model behind the latest version of ChatGPT: we can't really look inside it to see the mathematics that produces its answers. Instead, we can only theorize about what is going on inside.

More Experts Concerned About AI Than Ever Before

Yudkowsky is not alone in this concern. In fact, more than 1,100 artificial intelligence experts, CEOs, and researchers, including Elon Musk and Yoshua Bengio, one of the "godfathers of AI," have signed an open letter calling for a temporary moratorium on advanced AI development. The pause would give them time to assess just how powerful AI can become and decide whether we need to start placing limits on the technology.

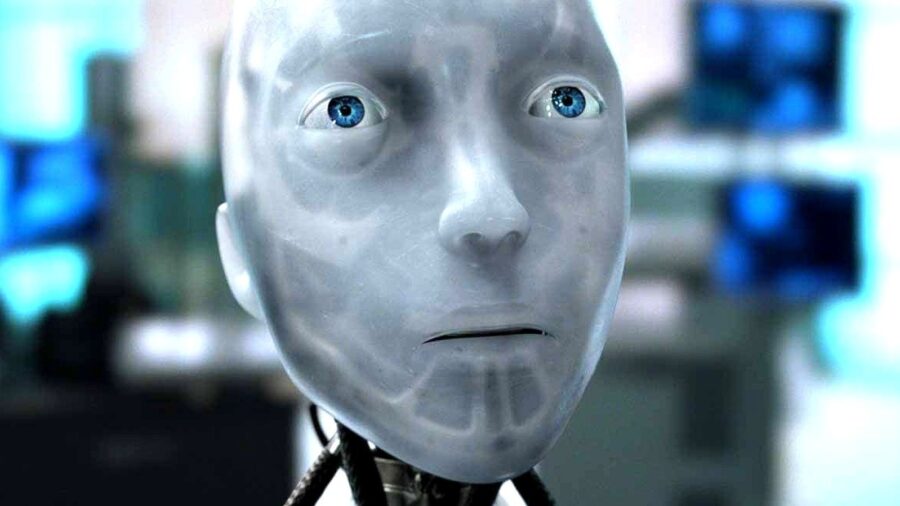

The AI experts want to create safety protocols, overseen by outside experts, to help ensure the technology does not get out of control. The goal is to create AI technology that is safe, trustworthy, accurate, and, most importantly, loyal. This is meant to keep humanity from being taken over by robots, as it is in countless movies such as I, Robot, Blade Runner, and the entire Terminator franchise.

Unfortunately, not everyone is on the same page. Employees at AI labs are hard at work trying to build ever more powerful AI that outperforms their competitors' systems. At the rate they are going, they could create something so powerful that they can neither predict nor understand it, which could lead to the downfall of humanity.

Other looming risks associated with AI include mass plagiarism, a growing environmental footprint, and the loss of jobs held by hard-working humans. Most recently, actors and writers in Hollywood have gone on strike to try to protect their jobs from the threat of AI. In the near future, we could see tools like ChatGPT writing Hollywood's next big script and CGI likenesses replacing real human actors.

While it's entertaining to wonder what the AI apocalypse could look like, it is equally important to listen to doomsday believers like Yudkowsky, because they just might be on to something.