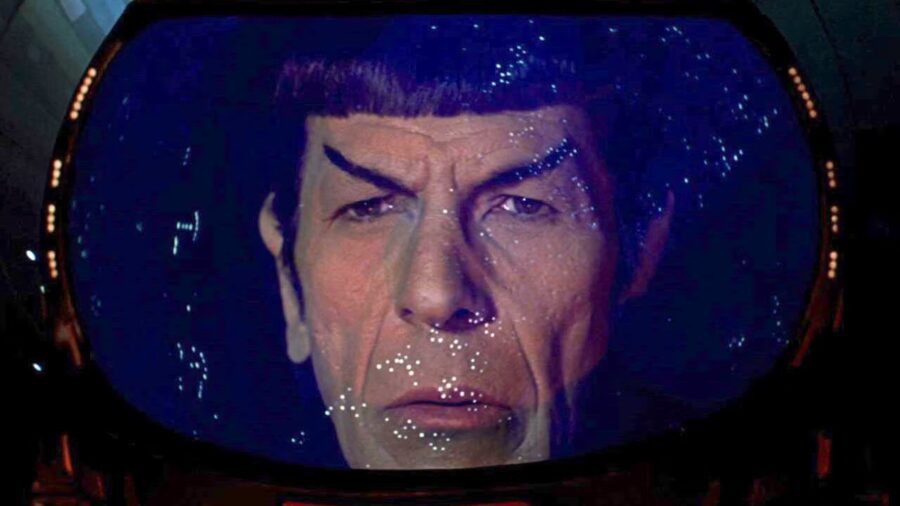

Star Trek Makes AI Better At Math

As researchers continue to explore the many ways of prompting large language models (LLMs), they have made a startling discovery: framing prompts with Star Trek terminology improved an AI model’s ability to solve simple math problems compared with other prompts. Based on the current findings, you can live long and prosper if you know how to approach an LLM in a way that plays to that particular model’s strengths.

Don’t Assume It Works All The Time

Does this mean that Star Trek references should be fed into every AI chatbot to achieve optimal results? That is not necessarily what researchers Rick Battle and Teja Gollapudi posit in their paper, “The Unreasonable Effectiveness of Eccentric Automatic Prompts.” Battle and Gollapudi originally set out to test the effect of “positive thinking” prompts on LLMs and happened to stumble upon this phenomenon along the way.

Captain’s Log

Their results suggest that output depends not only on what you ask a chatbot but also on how you ask it. Working with Mistral-7B, Llama2-13B, and Llama2-70B as the three primary LLMs in their study, Battle and Gollapudi tested 60 human-written prompt variations built around positive reinforcement and judged each one by the answers it produced. When they gave Llama2-70B a math prompt that asked it to use Star Trek terminology such as “Captain’s Log, Stardate” in its answer, the model surprised them with higher-quality output than the other prompts produced.
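The article does not describe the researchers’ actual test harness, so what follows is only a minimal sketch of the general idea of comparing prompt framings: each variant (a hypothetical system message plus an answer prefix) is wrapped around the same math questions, and a prompt’s score is the fraction of questions it gets right. Everything here is an illustrative assumption rather than the study’s code: `toy_model` is a hypothetical stand-in for a real LLM call, and `PROMPT_VARIANTS`, `DATASET`, `score_prompt`, and the “last number in the reply is the answer” rule were all invented for this example.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical prompt variants: (system message, answer prefix).
# The wording is illustrative only, not the text used in the study.
PROMPT_VARIANTS: Dict[str, Tuple[str, str]] = {
    "plain": ("You are a helpful assistant.", "Answer:"),
    "positive": ("You are excellent at math. Think carefully.", "Answer:"),
    "star_trek": ("You are the captain of a starship. Solve this for your crew.",
                  "Captain's Log, Stardate:"),
}

# Tiny hypothetical question set: (question, reference answer as a string).
DATASET: List[Tuple[str, str]] = [
    ("What is 2 + 3?", "5"),
    ("What is 10 + 7?", "17"),
]


def build_prompt(system: str, question: str, answer_prefix: str) -> str:
    """Wrap one question in a system message and an answer prefix."""
    return f"{system}\n\nQuestion: {question}\n{answer_prefix}"


def extract_last_number(text: str) -> Optional[str]:
    """Treat the last number in a reply as the model's final answer (an assumption)."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text)
    return matches[-1] if matches else None


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: answers 'What is A + B?' correctly."""
    match = re.search(r"What is (\d+) \+ (\d+)\?", prompt)
    if match is None:
        return "I am not sure."
    a, b = int(match.group(1)), int(match.group(2))
    return f"The result is {a + b}."


def score_prompt(model: Callable[[str], str], system: str, answer_prefix: str,
                 dataset: List[Tuple[str, str]]) -> float:
    """Fraction of questions whose extracted answer exactly matches the reference."""
    correct = 0
    for question, reference in dataset:
        reply = model(build_prompt(system, question, answer_prefix))
        if extract_last_number(reply) == reference:
            correct += 1
    return correct / len(dataset)


if __name__ == "__main__":
    # Score every framing on the same questions so only the prompt wording differs.
    for name, (system, prefix) in PROMPT_VARIANTS.items():
        accuracy = score_prompt(toy_model, system, prefix, DATASET)
        print(f"{name}: {accuracy:.2f}")
```

Because the toy model ignores the framing, every variant scores the same here; swapping `toy_model` for a function that queries a real model is what would let the loop reveal whether wording alone shifts accuracy on a fixed question set.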

Star Trek’s Focus On Logic

The main takeaway is that although Star Trek references led to better results from the model the researchers were using, Star Trek-based prompts will not necessarily yield better results in every context. The prevailing theory is that the data Llama2-70B was trained on may have a strong connection to Star Trek, so that the framing echoes the logic found in that material. Given how much emphasis Star Trek places on logic and reason, it is plausible that asking the chatbot to mimic the language of a Starfleet commander helps it handle the logical question it has been given.

Star Trek Isn’t A Universal Fix

AI models do not apply logic and reason of their own when generating responses; instead, they predict likely output based on patterns in the data they were trained on. Catherine Flick of Staffordshire University, UK, suggested to New Scientist that although Star Trek terminology worked in this one instance, other AI models may not respond the same way, since each relies on its own training data. Still, now that this has come to light, we can have a lot of fun figuring out how to get an LLM to write a dissertation based on Seinfeld references, at least in theory.

More Linguistic Experiments Are Inevitable

Though we still have a long way to go in figuring out how best to use LLMs to work more efficiently, this initial research is promising. The Star Trek prompt will probably be the first of many linguistic experiments that researchers run on AI chatbots, with varying results, and the same approach can be applied across the technology to give us a better understanding of how AI actually works.

Source: arXiv