AI Apocalypse Kill Switches Could Save Humanity

Researchers at the University of Cambridge have proposed using remote kill switches and lockouts to mitigate a potential AI apocalypse. Like the safeguards that prevent unauthorized nuclear weapon launches, these switches would be built directly into the underlying hardware. The proposal drew on contributors from a range of institutions, including several researchers from OpenAI.

Giving Humans The Final Say

The idea is that building kill switches directly into the silicon of AI systems would help prevent malicious use. If an AI apocalypse appeared imminent, regulators could shut the systems down. Taken further, the hardware could disable itself or be disabled remotely by watchdogs.

Like A Damaged Pringles Can, We’d Need New Chips

Modified AI chips could support such actions, letting regulators remotely verify that a chip is operating legitimately and switch it off if it is not. In a more detailed version of the proposal, the researchers advocate letting regulators control processor functionality remotely through digital licensing.

Specialized co-processors on the chip could hold a cryptographically signed digital “certificate,” with changes to the use-case policy delivered remotely through firmware updates. The chip producer would administer the on-chip license, while the regulator could renew it periodically. An expired or unauthorized license would render the chip inoperable or reduce its performance.
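To make the licensing idea more concrete, here is a minimal Python sketch of the kind of check an on-chip co-processor might run. Everything in it is hypothetical: the license fields, the `THROTTLE_GRACE_SECONDS` window, and the three chip states are illustrative assumptions, not details from the paper. HMAC-SHA256 with a shared key also stands in for the public-key signature a real design would more likely use, purely so the example runs on the standard library alone.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from enum import Enum

# Hypothetical stand-in: a real chip would more likely verify a public-key
# signature against a key fixed at manufacture. HMAC with a shared secret is
# used here only so the sketch needs nothing beyond the standard library.
REGULATOR_KEY = b"hypothetical-shared-secret"

# Hypothetical grace period after expiry during which the chip runs throttled.
THROTTLE_GRACE_SECONDS = 7 * 24 * 3600


class ChipState(Enum):
    FULL = "full"            # valid, unexpired license
    THROTTLED = "throttled"  # recently expired: reduced performance
    DISABLED = "disabled"    # forged, tampered with, or long expired


@dataclass
class License:
    chip_id: str
    expires_at: int  # Unix timestamp chosen by the regulator at renewal
    signature: str   # hex-encoded MAC over chip_id and expires_at


def _payload(chip_id: str, expires_at: int) -> bytes:
    # Canonical serialization so issuer and verifier MAC identical bytes.
    return json.dumps({"chip_id": chip_id, "expires_at": expires_at},
                      sort_keys=True).encode()


def issue_license(chip_id: str, expires_at: int,
                  key: bytes = REGULATOR_KEY) -> License:
    """Issuer side: produce a license bound to one specific chip."""
    sig = hmac.new(key, _payload(chip_id, expires_at), hashlib.sha256).hexdigest()
    return License(chip_id, expires_at, sig)


def check_license(lic: License, chip_id: str, now: int,
                  key: bytes = REGULATOR_KEY) -> ChipState:
    """Chip side: decide how the chip may operate under this license."""
    expected = hmac.new(key, _payload(lic.chip_id, lic.expires_at),
                        hashlib.sha256).hexdigest()
    # Reject licenses issued for another chip or whose fields were altered;
    # compare_digest avoids leaking how much of the MAC matched.
    if lic.chip_id != chip_id or not hmac.compare_digest(expected, lic.signature):
        return ChipState.DISABLED
    if now <= lic.expires_at:
        return ChipState.FULL
    if now <= lic.expires_at + THROTTLE_GRACE_SECONDS:
        return ChipState.THROTTLED
    return ChipState.DISABLED


if __name__ == "__main__":
    lic = issue_license("chip-001", expires_at=1_000_000)
    print(check_license(lic, "chip-001", now=999_999).value)    # full
    print(check_license(lic, "chip-001", now=1_003_600).value)  # throttled
    # Someone edits the expiry date but cannot recompute the MAC.
    forged = License("chip-001", expires_at=2_000_000, signature=lic.signature)
    print(check_license(forged, "chip-001", now=1_500_000).value)  # disabled
```

Binding the license to a chip ID stops a license for one chip from being replayed on another, and an edited expiry date fails verification. The sketch also shows where the risk sits: whoever holds the signing key can enable or disable chips at will, so that key becomes the obvious target.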

Kill Switches Have Their Own Dangers

While this approach theoretically lets watchdogs respond swiftly to abuse of sensitive technologies, or to an impending AI apocalypse, by remotely cutting off access to chips, the authors caution that it carries risks. Implemented incorrectly, a kill switch could itself become a target for cybercriminals.

Crippling The AI

Another proposal would require multiple parties to approve potentially risky AI training runs before they proceed at scale. The mechanism draws a parallel with the permissive action links on nuclear weapons, which are designed to prevent unauthorized launches: an individual or company would need authorization before training a model above a certain compute threshold in the cloud.
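A gate of this kind for cloud training could be sketched roughly as follows. The compute threshold, the set of approving parties, the two-approval rule, and the use of the common "6 × parameters × training tokens" rule of thumb to estimate training compute are all illustrative assumptions, not details taken from the paper.

```python
from dataclasses import dataclass, field

# Hypothetical compute threshold (in FLOP) above which a run needs sign-off.
THRESHOLD_FLOP = 1e26

# Hypothetical parties allowed to approve, and how many must agree.
AUTHORIZED_PARTIES = {"regulator", "cloud_provider", "independent_auditor"}
REQUIRED_APPROVALS = 2


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough training cost via the widely used ~6 * params * tokens heuristic."""
    return 6 * n_params * n_tokens


@dataclass
class TrainingRequest:
    requester: str
    n_params: float
    n_tokens: float
    approvals: set[str] = field(default_factory=set)

    def approve(self, party: str) -> None:
        # Only authorized parties count, and nobody may approve their own run.
        # Using a set means repeated approvals from one party count once.
        if party in AUTHORIZED_PARTIES and party != self.requester:
            self.approvals.add(party)

    def may_start(self) -> bool:
        """Small runs proceed freely; large ones need distinct approvals."""
        if estimate_training_flop(self.n_params, self.n_tokens) <= THRESHOLD_FLOP:
            return True
        return len(self.approvals) >= REQUIRED_APPROVALS


if __name__ == "__main__":
    # A trillion-parameter model on 20 trillion tokens: ~1.2e26 FLOP.
    run = TrainingRequest("lab_a", n_params=1e12, n_tokens=2e13)
    print(run.may_start())  # False: over threshold, no approvals yet
    run.approve("regulator")
    run.approve("independent_auditor")
    print(run.may_start())  # True
```

Like a two-person rule, no single approver can unlock a large run alone. Where exactly to set the threshold is the hard policy question the researchers flag.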

However, the researchers acknowledge that such stringent controls could hinder the development of beneficial AI. They note that while the consequences of using nuclear weapons are clear-cut, AI applications do not always fall into black-and-white categories; even what constitutes an AI apocalypse is open to debate.

AI Needs PR?

For readers who find the concept of an AI apocalypse too dystopian, the paper devotes a section to reallocating AI resources for the greater good of society. Under this idea of “allocation,” policymakers could collaborate to make AI more accessible to groups less likely to misuse it, offering a more positive view of responsible AI use.

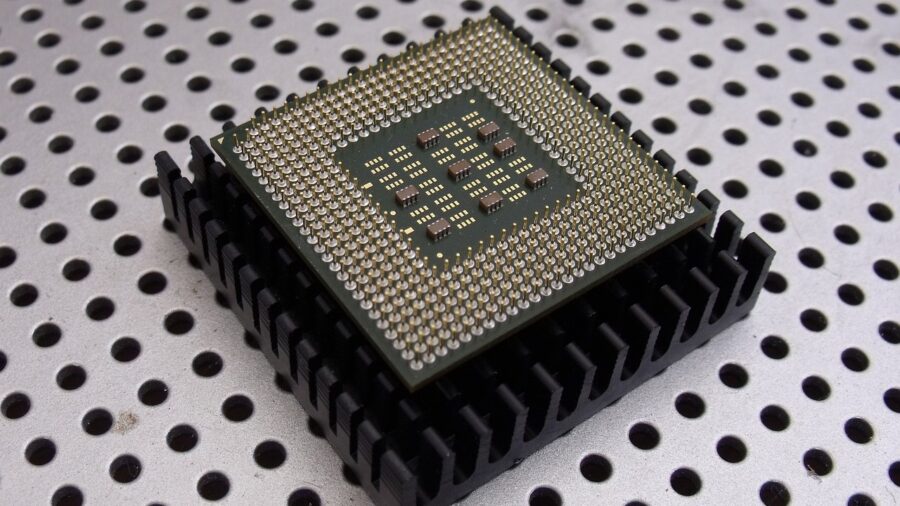

It’s The Hardware

The paper, titled “Computing Power and the Governance of Artificial Intelligence,” also argues that the most effective way to curb misuse of AI models is to regulate the hardware they are built on. The researchers contend that AI-relevant compute, a crucial input for training massive models with more than a trillion parameters, offers a practical point of intervention.

The hardware is detectable, excludable, and quantifiable, and its supply chain is concentrated, which makes it easier to regulate. Because the most advanced chips are produced by a small number of companies, policymakers can control their sale and distribution. The researchers propose establishing a global registry of AI chip sales to track these components throughout their lifecycle, including across borders.
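The registry is described only at a high level. One way to picture the underlying data structure is an append-only event log per chip, as in this hypothetical Python sketch; the event types, fields, company names, and country codes are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChipEvent:
    kind: str     # hypothetical: "manufactured", "sold", "transferred", "decommissioned"
    holder: str   # entity holding the chip after this event
    country: str  # two-letter country code of the holder's location


class ChipRegistry:
    """Append-only record of each chip's lifecycle, keyed by serial number."""

    def __init__(self) -> None:
        self._events: dict[str, list[ChipEvent]] = {}

    def register(self, serial: str, maker: str, country: str) -> None:
        # Every chip enters the registry once, at manufacture.
        if serial in self._events:
            raise ValueError(f"chip {serial} already registered")
        self._events[serial] = [ChipEvent("manufactured", maker, country)]

    def record(self, serial: str, kind: str, holder: str, country: str) -> None:
        # Events are only ever appended, so the full history is preserved.
        history = self._events.get(serial)
        if history is None:
            raise KeyError(f"unregistered chip {serial}")
        if history[-1].kind == "decommissioned":
            raise ValueError(f"chip {serial} has been decommissioned")
        history.append(ChipEvent(kind, holder, country))

    def current(self, serial: str) -> ChipEvent:
        """Most recent event, i.e. who holds the chip now and where."""
        return self._events[serial][-1]

    def countries(self, serial: str) -> list[str]:
        """Countries the chip passed through, in order, collapsing consecutive repeats."""
        path: list[str] = []
        for event in self._events[serial]:
            if not path or path[-1] != event.country:
                path.append(event.country)
        return path


if __name__ == "__main__":
    reg = ChipRegistry()
    reg.register("SN-0001", maker="FabCo", country="TW")
    reg.record("SN-0001", "sold", holder="CloudCo", country="US")
    reg.record("SN-0001", "transferred", holder="LabY", country="DE")
    print(reg.current("SN-0001").holder)  # LabY
    print(reg.countries("SN-0001"))       # ['TW', 'US', 'DE']
```

Keeping the log append-only means a chip's cross-border path can always be reconstructed, which is the lifecycle tracking the proposal aims for.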