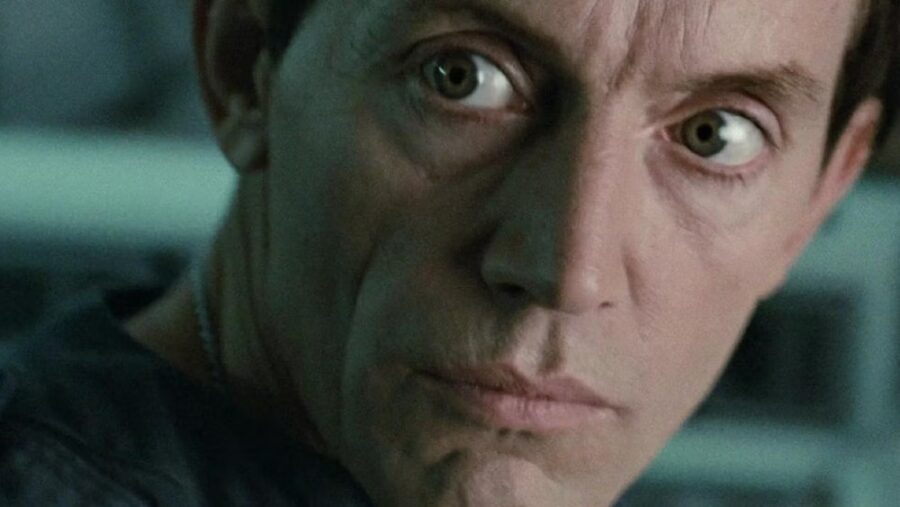

Mark Zuckerberg AI Terrifies Experts

Mark Zuckerberg, the founder and CEO of Meta, recently announced his intention to develop an AI to rival humans. He also plans to make this advanced AI system open source so that it is accessible to developers and the public alike. While Zuckerberg sees this as a step towards benefiting humanity, experts and academics worldwide are concerned about the risks.

Zuckerberg’s Plans For AI

In a Facebook post, Mark Zuckerberg emphasized the necessity of building an Artificial General Intelligence (AGI) system, a still-theoretical form of AI, for the next generation of tech services. “This technology is so important, and the opportunities are so great that we should open source and make it as widely available as we responsibly can, so everyone can benefit,” he wrote.

The Meta Chief’s Critics

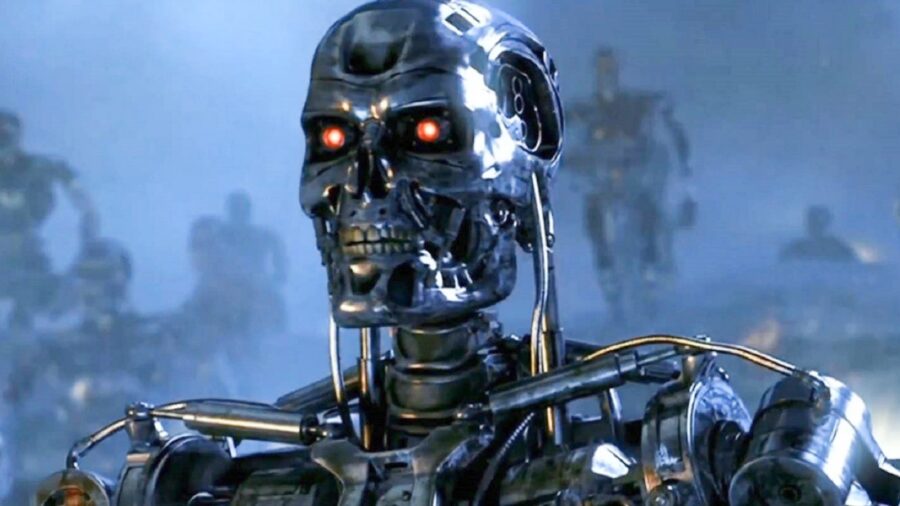

Opposing Mark Zuckerberg is Dame Wendy Hall, a computer science professor at the University of Southampton and a member of the UN’s advisory body on AI. Hall described the idea of open source AGI as “really very scary” and criticized the tech mogul for considering it. “In the wrong hands, technology like this could do a great deal of harm. It is so irresponsible for a company to suggest it,” she said.

Another expert, Dr. Andrew Rogoyski from the Institute for People-Centred AI at the University of Surrey, argued that decisions about open-sourcing AGI should not rest solely in the hands of a tech company like Meta. “These decisions need to be taken by international consensus, not in the boardroom of a tech giant,” Rogoyski said.

Zuckerberg’s Other AI

Mark Zuckerberg’s previous decision to open source the Llama 2 AI model faced criticism, with Hall likening it to “giving people a template to build a nuclear bomb.” Llama 2 was released as a mostly open-source project in July 2023. As a large language model, it can generate text and provide detailed guidance on various topics, including how to create a biological weapon like anthrax.

Meta is currently training Llama 3, which will have code-generating capabilities and more advanced reasoning and planning abilities. Despite Mark Zuckerberg’s reassurance that Meta would approach open-sourcing his new AI responsibly, skepticism remains. Fortunately, it will likely take several years before an AGI is available on a large scale, which will allow time to establish appropriate regulatory systems.

When Will The New AI Emerge?

Mark Zuckerberg did not provide a specific timeline for the development of his new AI. However, he mentioned that Meta has invested heavily in infrastructure for creating new, updated AI systems, including a significant stockpile of AI processing chips. Additionally, Zuckerberg mentioned that work on Llama 3 is currently in progress.

Along with Mark Zuckerberg’s AI, the California-based company OpenAI, responsible for creating ChatGPT, is actively developing its own Artificial General Intelligence to rival the human mind. Demis Hassabis, the head of Google’s AI division, Google DeepMind, has also suggested that AGI could be achieved within the next decade.

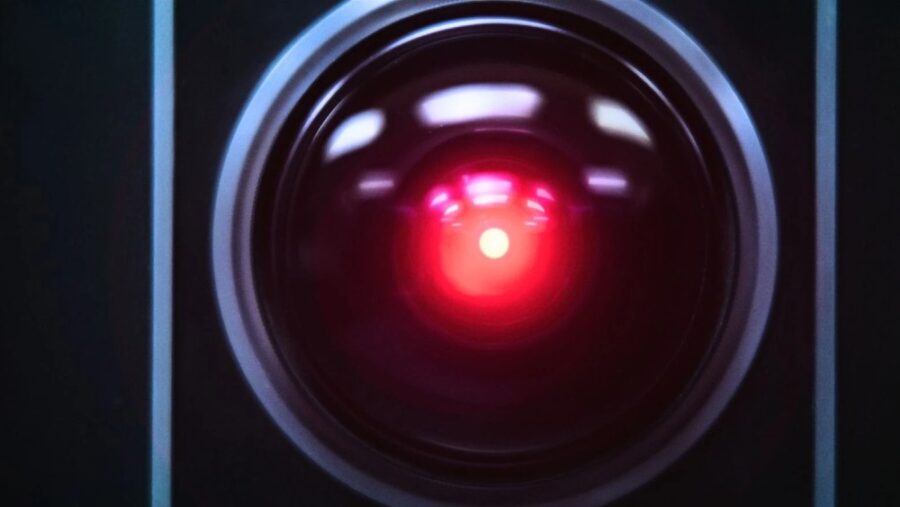

The Inherent Risks

The dangers of Mark Zuckerberg’s highly advanced AI lie in the potential loss of control. As AI systems become increasingly sophisticated, they may come to operate beyond our understanding and become unmanageable. The biggest concern is the development of AGI, which could autonomously make decisions, learn, and adapt in ways that may not align with human values.

The risks include AI systems making biased or unethical decisions, exacerbating societal inequalities, or causing economic disruptions by automating jobs at an unprecedented scale. Moreover, Mark Zuckerberg’s advanced AI could be exploited for malicious purposes, posing threats to cybersecurity and privacy. Issues related to accountability and transparency may also arise when mistakes are made.

Source: Guardian