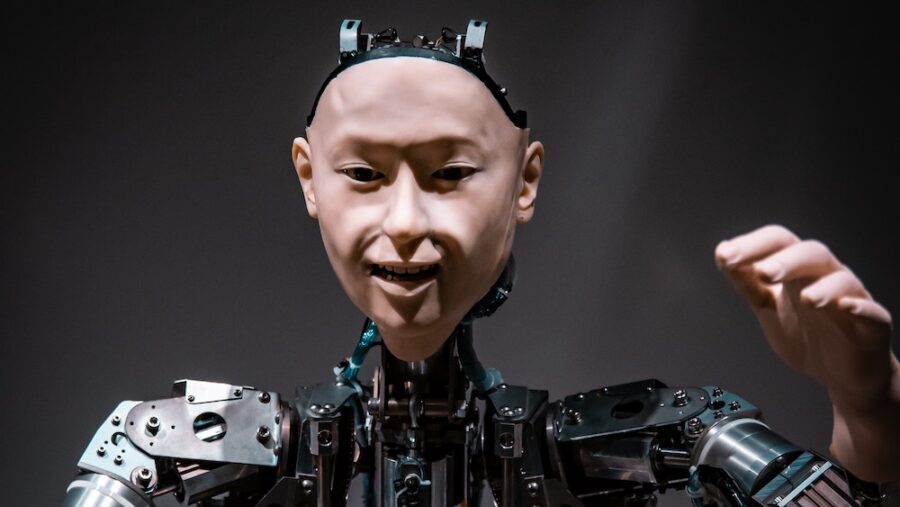

Top AI Researcher Says Emerging Technology Will End Humanity

If you’re in the mood for bad news (who isn’t?), read on: a leading AI safety scientist and director of the Cyber Security Laboratory at the University of Louisville, Roman Yampolskiy, issued a dire warning. The expert asserted that to a near certainty—as in, a 99.999999 percent chance—AI will end humanity.

Cheery stuff.

Not The Popular View?

Important to note, however, is that the grim prognosis contrasts sharply with the views of other big-name figures in the AI community. This reflects the significant divergence of opinions when it comes to the perceived risks associated with advanced AI tech.

A concept called “p(doom)” lies at the heart of this discussion, however outlandish it sounds. p(doom) represents the likelihood of AI causing catastrophic outcomes, such as the technology taking over the planet or triggering a nuclear war. As can perhaps be expected, expert estimates of p(doom) vary widely.

Less Likely Than An Asteroid Hitting Earth?

In other words, not every scientist believes AI will end humanity or maintains the hypothesis with as much confidence as Yampolskiy.

For instance, Yann LeCun, an AI pioneer and in-house AI expert at Meta, voices an optimistic take. In his eyes, the risk remains less than 0.01 percent, lower than the likelihood of an asteroid impact.

Still Chances It Ends Badly

However, before you jump for joy, jubilant that we have avoided the sci-fi catastrophe Yampolskiy forecasts, consider that other leading researchers harbor more concerning assessments.

For example, Geoff Hinton, the famed British-Canadian computer scientist and AI luminary, puts the chance that AI will end humanity within the next 20 years at 10 percent. Yoshua Bengio, another Canadian scientist and one of the field’s veritable rockstars, puts the risk at 20 percent.

Twenty percent is pretty scary.

Elon Musk Recognizes Issues

Given how relevant these estimates are, the discussion they inspired reached a broader audience at the recent Abundance Summit; there, Elon Musk (heard of him?) took part in the immodestly named “Great AI Debate.”

Musk recognized some degree of risk that AI would end humanity, aligning himself with Hinton and Bengio’s assessments. Nonetheless, the rocket-launching, car-producing tycoon emphasized that a positive outcome is more likely than the negative (terrifying) scenarios.

Halting AI Development?

But Yampolskiy strongly disagrees with Musk and the more optimistic scientists. Indeed, the University of Louisville’s own expert considers Musk’s stance too conservative and advocates for a total halt to AI development.

In Yampolskiy’s eyes, such a stoppage is the only surefire way to avoid uncontrollable risks associated with more advanced systems.

For his part, Musk countered this gloomy prognosis by underscoring the importance of ethical AI development. He specifically stressed the importance of not programming AI to lie, even if the truth is unpleasant. Interestingly, Musk said that this principle is critical in mitigating some of the existential risks AI could pose, namely that it will end humanity.

AI Community At Odds?

Ultimately, the disparity in views underscores a fundamental debate in the AI community—one worth paying attention to.

At its heart lies an urgent question: how can we balance AI’s incredible potential benefits against the existential risks it might represent?

It’s certainly disconcerting that the world’s leading experts disagree on the answer.

Source: TechRadar